Introduction

As an important part of traditional Chinese culture, seal cutting carries the national spirit and rich cultural connotations. The Seal Cutting Display System addresses the limited reach of seal carving education in the post-epidemic era. It promotes knowledge of seal carving through an offline interactive exhibition, helping students and exhibition visitors understand the culture and history of seal cutting and experience the charm of seal characters. The system therefore has high value for science popularization.

The seal cutting display system offers users a more engaging experience than a traditional exhibition. It is shown at an offline technology exhibition and connects iPads, large interactive screens, and mobile devices (phones or otherwise) through the Web. On this platform, users interact with the large screen in real time and play with the bubble components in a simulated physical world built on the web. Photos taken during the visit are processed by the system into a page marker, which helps users understand the connection between human actions and seal characters and gives them a memento of the experience.

The seal cutting display system introduces seal characters through verbs, and users can unlock a limited set of them. The following 12 words are included: "paw", "swing", "strike", "lift", "straddle", "climb", "run", "ride", "shoot", "kick", "throw", "swim".

Responsibilities

· Determined the system implementation approach and the communication method between multiple devices at the early stage of development.

· Introduced Matter.js to build the front-end physics system, implemented the bubble interaction functions and logic, and modified and optimized interface performance based on the design requirements.

· Responsible for the front-end development of the display interface.

· Studied the implementation principles of target detection, integrated the technical interface, and created a personal data set.

· Built the database, created the Django server, and deployed the web page online.

· Worked closely with designers and project leaders to modify and improve the project.

Architecture

The system was developed with a B/S (browser/server) architecture. The front end uses HTML5, CSS, and JavaScript. The JavaScript part is developed mainly with the jQuery library, and several packaged libraries such as TensorFlow.js and Matter.js help implement the project's functional requirements. The back end mainly uses the Flask web application framework to implement the related APIs.

Development division of labor

During the development of the seal cutting display system, my work mainly included: designing the module functions for character selection and bubble interaction; developing the static front-end interface and the physics engine interface; building the back-end framework and implementing the image processing functions; deploying to the cloud server; and training the model.

Introduction of modules

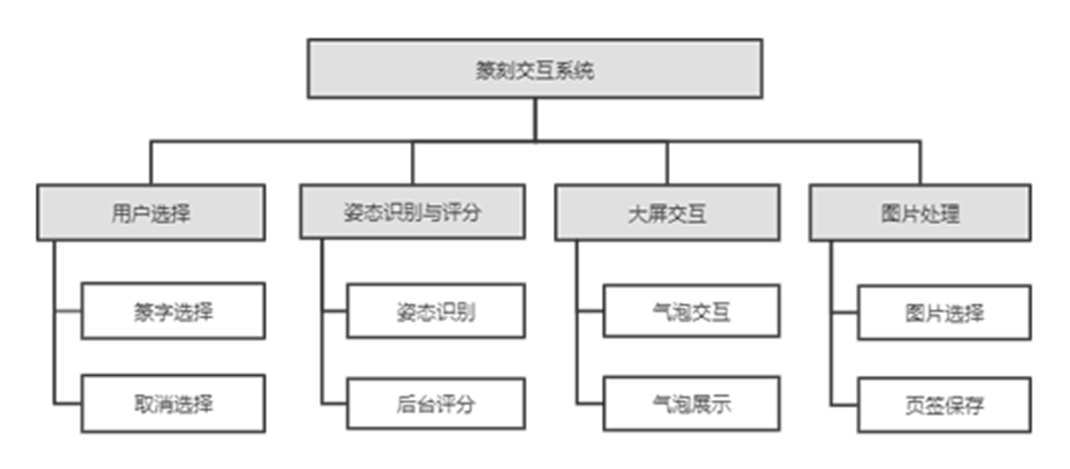

The seal engraving interactive system contains four modules: user selection, pose recognition and scoring, large-screen interaction, and image processing (page marker generation). The main contents are as follows.

(1) User selection module

It contains the functions of selecting and deselecting seal characters.

This module obtains the seal characters selected by the user and, together with the database, defines how selection signals are transmitted and handled. It starts the unlocking process for the character the user selected, and CSS animations make the user interface more expressive.

(2) Pose recognition and scoring module

It includes pose recognition and background scoring functions.

This module runs pose recognition on the images taken by users. Scoring criteria built on the recognition results let the back end rate how closely the user's action matches the standard. Depending on the score, the system returns an evaluation or guides the user to try again.
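The text does not state the actual scoring criteria, so the following is only a minimal sketch of one plausible approach: compare joint angles of the user's pose with a reference pose and map the mean angular error to a 0 to 100 score. The keypoint names, the `JOINTS` list, `max_error`, and `pass_mark` are all hypothetical and are not taken from the project.

```python
import math

# Hypothetical joints: (end point, vertex, end point) keypoint names.
JOINTS = [
    ("left_shoulder", "left_elbow", "left_wrist"),
    ("right_shoulder", "right_elbow", "right_wrist"),
]


def joint_angle(a, vertex, b):
    """Angle in degrees (0..180) at `vertex` between the rays to a and b."""
    angle = math.degrees(
        math.atan2(b[1] - vertex[1], b[0] - vertex[0])
        - math.atan2(a[1] - vertex[1], a[0] - vertex[0])
    )
    angle = abs(angle) % 360.0
    return 360.0 - angle if angle > 180.0 else angle


def score_pose(user, reference, max_error=90.0):
    """Map the mean joint-angle error (capped at max_error) to 0..100."""
    errors = []
    for a, v, b in JOINTS:
        diff = abs(
            joint_angle(user[a], user[v], user[b])
            - joint_angle(reference[a], reference[v], reference[b])
        )
        errors.append(min(diff, max_error))
    mean_error = sum(errors) / len(errors)
    return round(100.0 * (1.0 - mean_error / max_error), 1)


def feedback(score, pass_mark=60.0):
    """Assumed rule: unlock on a passing score, otherwise ask for a retry."""
    return "unlocked" if score >= pass_mark else "try again"
```

Comparing angles rather than raw coordinates keeps the score independent of where the user stands in the frame and how large they appear, which is one reason this kind of criterion is a common starting point.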

(3) Large screen interaction module

It includes bubble interaction and bubble display functions.

Bubble components are generated for the seal characters the user has unlocked. Front-end physics engine techniques are used to design the bubble structure and rendering, implementing bubble generation, disappearance, collision, regular movement, fusion, and interaction with the user, all intended to make the interface components more expressive.

(4) Page marker generation module

It includes image selection and saving functions.

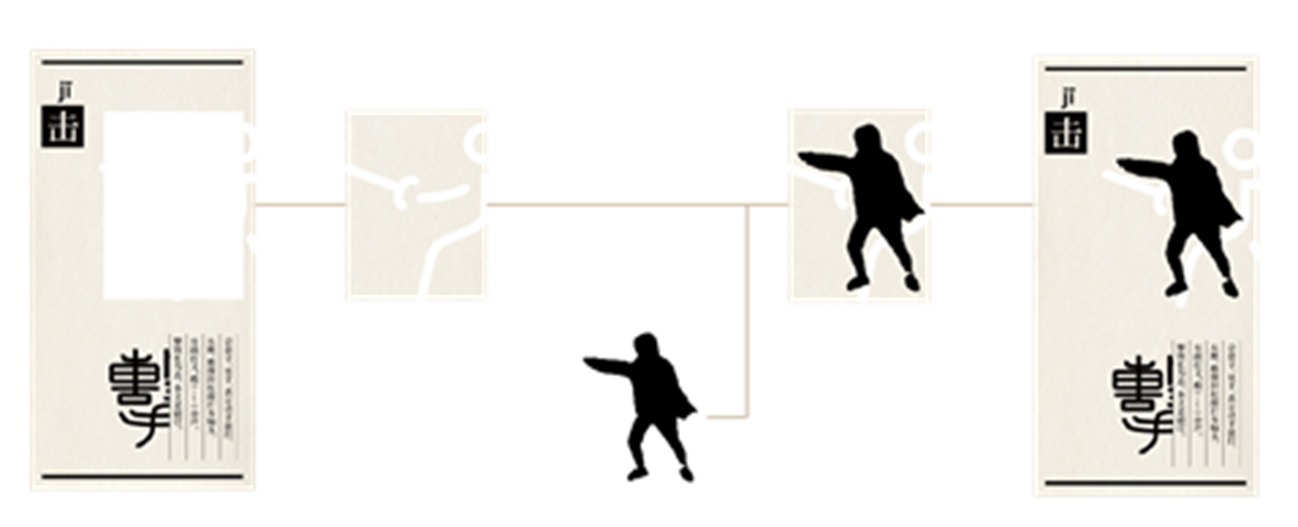

Images taken by users are processed through a pipeline designed in conjunction with the database, including target detection and portrait matting, to generate customized page markers from user images.

Principle of implementation

Front-end physics engine

A front-end physics engine provides a physics system close to everyday life by simulating variables such as the mass and velocity of objects. It gives realistic physical behavior to rigid bodies, soft bodies, and other components, so they can reproduce everyday effects such as floating under the buoyancy of water or moving under a spring force. Such simulation brings the whole system much closer to the real world. A front-end physics engine library also helps front-end developers who lack an in-depth understanding of physics to complete these development tasks.

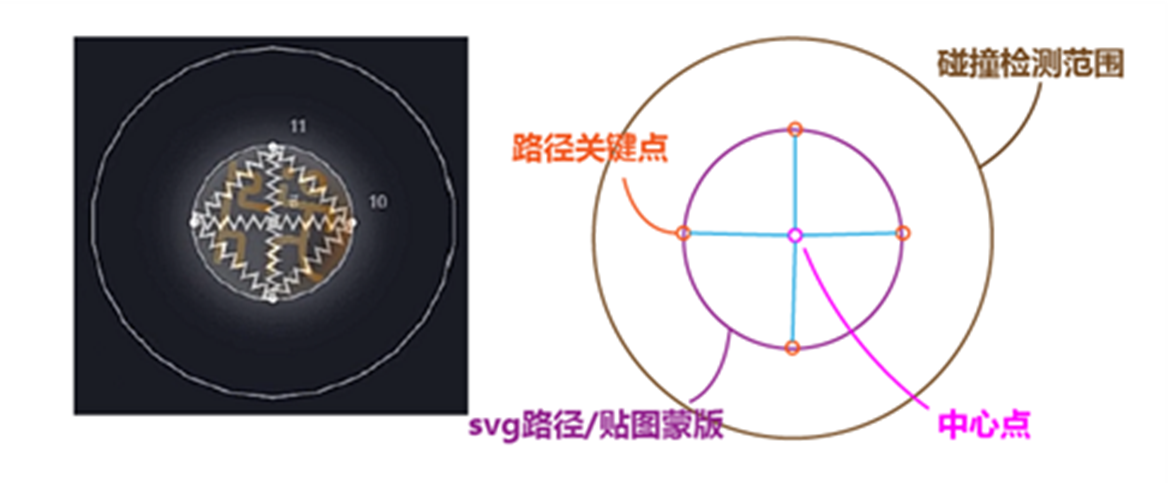

Matter.js is a web-based 2D physics engine that presents physics effects in the user interface through three parts: an engine, a world, and a renderer. The engine is the simulation controller: it manages and updates the world and detects and handles events such as collisions and triggers between bodies. Engines are created and manipulated through the Matter.Engine module. The world is a composite container that holds and manages bodies, constraints, and other composites, which lets it represent complex multi-part objects; it is managed through the Matter.World module (superseded by Matter.Composite in recent Matter.js releases). The Matter.Render module is a simple canvas-based renderer that supports vector drawing and view control and helps developers with debugging.

Image matting

Image matting refers to extracting a foreground, here a portrait, from a complex background. Portrait matting is now fairly mature and handles hair regions and noisy images well. Methods can be classified by processing approach into traditional methods and deep learning methods, and by interactivity into methods that require human interaction and methods that do not.
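Matting is commonly formulated per pixel as I = alpha * F + (1 - alpha) * B, where alpha is the foreground opacity. As a rough illustration, and assuming an alpha matte has already been produced by some matting method, the sketch below derives a binary silhouette mask from the matte and composites the portrait onto a new background. Images are plain nested lists of grayscale values for brevity, and the function names and the 0.5 threshold are illustrative assumptions rather than the project's code.

```python
def silhouette_mask(alpha, threshold=0.5):
    """Binary mask: 1 where the matte marks the pixel as portrait, else 0."""
    return [[1 if a >= threshold else 0 for a in row] for row in alpha]


def composite(foreground, background, alpha):
    """Blend two grayscale images per pixel: I = alpha*F + (1 - alpha)*B."""
    return [
        [round(a * f + (1.0 - a) * b) for f, b, a in zip(f_row, b_row, a_row)]
        for f_row, b_row, a_row in zip(foreground, background, alpha)
    ]
```

Fractional alpha values along hair and soft edges are what distinguish matting from a hard segmentation mask: those pixels blend with the new background instead of producing a jagged outline.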

Target detection

A target detection algorithm outputs bounding boxes that delineate objects of specified categories in an image. It is widely used in medicine, agriculture, and transportation, with practical applications in lesion detection, pest detection, and traffic surveillance. The algorithms fall into two categories: one-stage and two-stage. One-stage algorithms, also known as regression-based target detection algorithms, include YOLO and SSD. Faster R-CNN and the other R-CNN series algorithms are representative two-stage algorithms.
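Whichever family of detector is used, its output can usually be treated as a list of labelled boxes with confidence scores. The sketch below shows that shape and how boxes of one category might be kept. The `Detection` type, the `"person"` label, and the 0.5 confidence threshold are assumptions for illustration, not the interface of any particular detector.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    label: str         # predicted category name
    confidence: float  # detector score in [0, 1]
    box: tuple         # (x_min, y_min, x_max, y_max) in pixels


def filter_detections(detections, label="person", min_confidence=0.5):
    """Keep only boxes of the requested category above a confidence threshold."""
    return [
        d for d in detections
        if d.label == label and d.confidence >= min_confidence
    ]
```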

Technical implementation details

Front-end physics effects

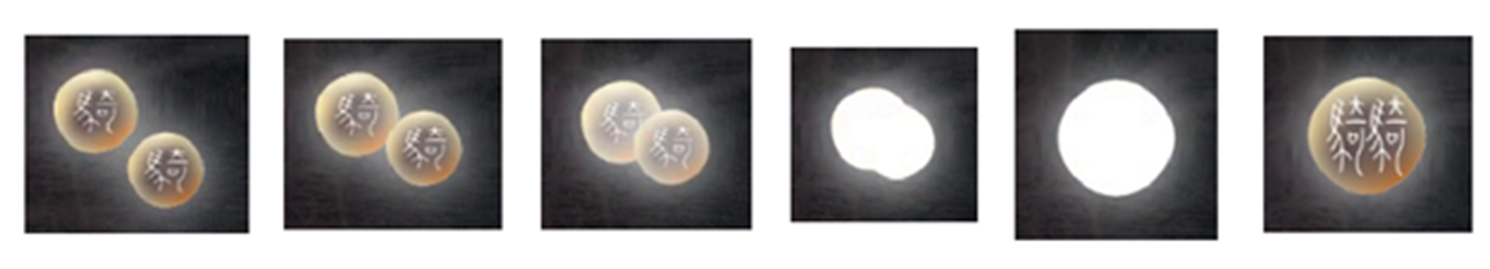

Matter.js (a front-end physics engine) is used to build the physical world in the large-screen interaction module, forming bubble components that follow both the laws of physics and pre-defined motion logic. Because Matter.js supports user input for controlling components, users at the exhibition can interact with the bubbles through the touch screen and apply external forces to them, which makes the experience more fun. A bubble is generated once the corresponding seal character has been unlocked, and users can then watch its whole life cycle on the bubble display. The bubble appears in the middle of the screen, rises along a randomly selected preset trajectory, and stops when it touches the top. After 500 s, the bubble continues to rise, leaves the screen, and disappears shortly afterwards. While a bubble is active, users can drag it on the interactive screen or let bubbles collide with each other to form combinations of seal characters.
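The bubble life cycle described above can be read as a small state machine. The following is a language-neutral sketch written in Python, not the project's Matter.js code. The 500 s hold comes from the text, while the rise speed, the fade time, and the class and state names are assumptions, and the random choice of a preset trajectory is omitted for brevity.

```python
from dataclasses import dataclass

HOLD_SECONDS = 500.0  # pause at the top of the screen, as stated in the text
FADE_SECONDS = 2.0    # assumed: the text only says "a short time"


@dataclass
class Bubble:
    y: float              # height above the bottom edge of the screen
    top: float            # height of the top edge of the screen
    speed: float = 50.0   # assumed rise speed in pixels per second
    state: str = "rising"
    timer: float = 0.0

    def step(self, dt):
        """Advance the bubble by dt seconds and return its state."""
        if self.state == "rising":
            self.y = min(self.y + self.speed * dt, self.top)
            if self.y >= self.top:
                self.state, self.timer = "holding", 0.0
        elif self.state == "holding":
            self.timer += dt
            if self.timer >= HOLD_SECONDS:
                self.state, self.timer = "leaving", 0.0
        elif self.state == "leaving":
            self.y += self.speed * dt  # continue past the top edge
            self.timer += dt
            if self.timer >= FADE_SECONDS:
                self.state = "gone"
        return self.state
```

For a 600-pixel-high screen, a bubble would be created with `Bubble(y=300.0, top=600.0)` so that it spawns in the middle, then `step` would be called once per animation frame.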

Paper.js and Matter.js are used together: the key points of a Paper.js path serve as the base components in the physics engine, and Matter.js constraints (Matter.Constraint) connect these key-point components. When a composite component collides, the force on each part varies with the properties and position of its constraints, which produces a slight deformation in the bubble collision, as shown in Figure 4.5. This makes the bubbles more expressive and more realistic.
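To illustrate why constraint-linked key points deform slightly on impact, here is a simplified position-based sketch in Python. It is not the Matter.js solver and does not use Paper.js: a ring of points is linked by distance constraints with a rest length and a stiffness, one point is displaced as if hit, and relaxation spreads the disturbance to its neighbours. All function names and parameter values are illustrative assumptions.

```python
import math


def make_ring(n, radius):
    """Key points evenly spaced on a circle (stand-ins for outline key points)."""
    return [
        [radius * math.cos(2 * math.pi * i / n),
         radius * math.sin(2 * math.pi * i / n)]
        for i in range(n)
    ]


def link_neighbours(points):
    """One distance constraint per adjacent pair: (i, j, rest_length)."""
    n = len(points)
    return [
        (i, (i + 1) % n, math.dist(points[i], points[(i + 1) % n]))
        for i in range(n)
    ]


def total_error(points, constraints):
    """Sum of absolute deviations from the rest lengths."""
    return sum(
        abs(math.dist(points[i], points[j]) - rest)
        for i, j, rest in constraints
    )


def relax(points, constraints, stiffness=0.5, iterations=10):
    """Move both ends of each constraint towards its rest length, in place."""
    for _ in range(iterations):
        for i, j, rest in constraints:
            dx = points[j][0] - points[i][0]
            dy = points[j][1] - points[i][1]
            dist = math.hypot(dx, dy)
            if dist == 0.0:
                continue
            shift = stiffness * (dist - rest) / dist / 2.0
            points[i][0] += dx * shift
            points[i][1] += dy * shift
            points[j][0] -= dx * shift
            points[j][1] -= dy * shift
```

A lower stiffness lets the outline give more before it recovers, which is the kind of knob that controls how soft the bubble looks in a collision.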

In the actual project, the bubble fusion effect follows the traditional implementation approach and is simulated with a frame-sequence animation and a halo effect.

Page marker generation and image processing process

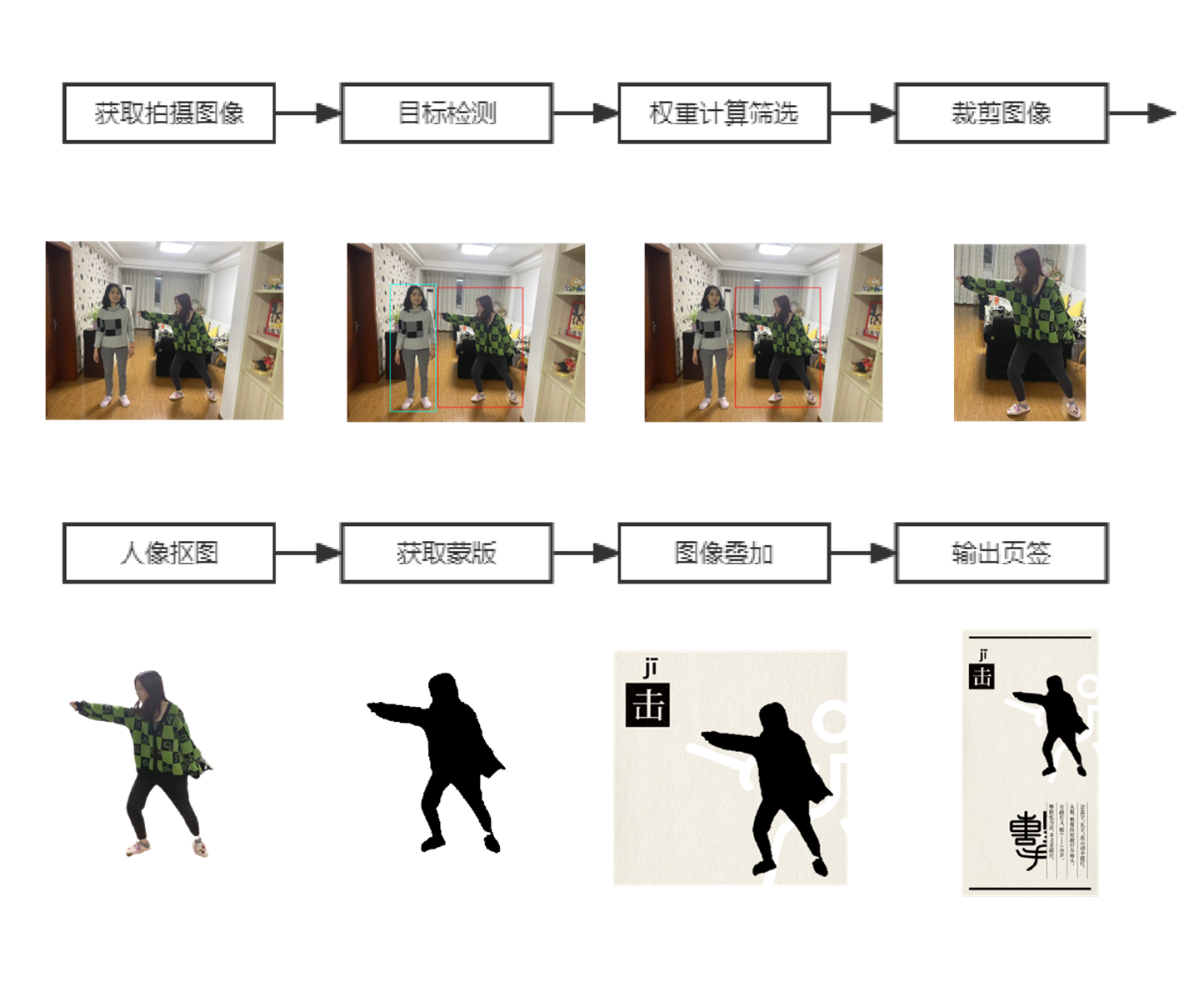

(1) Get the captured image: retrieve the captured image and the corresponding action id from the database.

(2) Target detection: identify the portraits in the image.

(3) Weight calculation and filtering: compute a weight for each recognition box from its size and its distance to the middle of the image, then sort the boxes in descending order of weight to select the target portrait.

(4) Crop the image: crop the image to the selected recognition box.

(5) Matte the portrait: remove the background.

(6) Get the mask: extract the pure black silhouette of the portrait (the masked area).

(7) Image overlay: overlay the result on the background image for the corresponding id.

(8) Output the page marker: the user can save the generated page marker as a memento.
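The weight calculation, filtering, and cropping steps above can be sketched as follows. The text only says that the weight depends on the size of the recognition box and its distance to the middle of the image, so the exact formula here (an equal-weight mix of relative area and centrality, controlled by a hypothetical `size_factor`) is an assumption, as are the function names. Images are nested lists of pixel values for brevity.

```python
import math


def box_weight(box, image_size, size_factor=0.5):
    """Higher weight for boxes that are larger and closer to the image centre."""
    x0, y0, x1, y1 = box
    width, height = image_size
    area_ratio = ((x1 - x0) * (y1 - y0)) / (width * height)
    cx, cy = (x0 + x1) / 2, (y0 + y1) / 2
    dist = math.hypot(cx - width / 2, cy - height / 2)
    max_dist = math.hypot(width / 2, height / 2)
    centrality = 1.0 - dist / max_dist
    return size_factor * area_ratio + (1.0 - size_factor) * centrality


def pick_target(boxes, image_size):
    """Sort boxes by weight in descending order and return the best one."""
    ranked = sorted(boxes, key=lambda b: box_weight(b, image_size), reverse=True)
    return ranked[0] if ranked else None


def crop(image, box):
    """Cut the box region out of an image stored as rows of pixel values."""
    x0, y0, x1, y1 = box
    return [row[x0:x1] for row in image[y0:y1]]
```

Ranking by both size and centrality favours the person who posed for the photo over bystanders who appear small or near the edge of the frame.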

More images